HDR systems: the latest in LED screens

Are you about to buy an LED screen but are not sure why the term HDR (High Dynamic Range) matters?

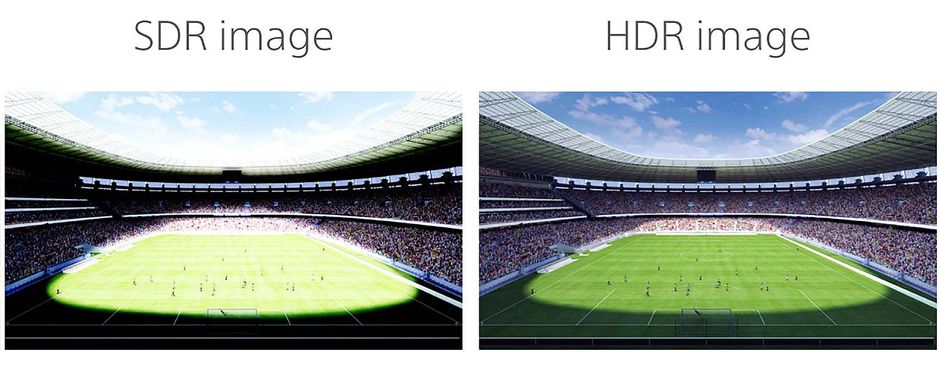

Do not worry, we are going to explain it here. HDR is, in short, the capability of your LED screen that is responsible for delivering scenes with more realistic colors and higher contrast.

Contrast is the ratio between the brightest white and the darkest black that an LED screen can display. The brightness of each is measured in candelas per square meter (cd/m²), a unit commonly called the nit.
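To make the idea concrete, here is a minimal Python sketch of how a contrast ratio can be worked out from two brightness readings in nits. The function name `contrast_ratio` and the sample values are assumptions chosen for illustration; they are not measurements of any real screen.

```python
def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Return the brightest-white luminance divided by the darkest-black luminance."""
    if black_nits <= 0:
        # A black level of zero would make the ratio undefined (division by zero).
        raise ValueError("black level must be greater than zero nits")
    return white_nits / black_nits


# Hypothetical readings, for illustration only.
standard_screen = contrast_ratio(white_nits=400.0, black_nits=0.5)
hdr_screen = contrast_ratio(white_nits=1000.0, black_nits=0.05)

print(f"Standard screen: {standard_screen:.0f}:1")
print(f"HDR screen: {hdr_screen:.0f}:1")
```

A brighter white or a darker black both raise the ratio, which is why HDR screens aim for both.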

There are multiple HDR formats, but there are currently two main players: the proprietary Dolby Vision format, and the open standard HDR10. Dolby was the first to join the party with a prototype TV capable of displaying up to 4,000 nits of brightness. For a short time, Dolby Vision was essentially synonymous with HDR, but not all manufacturers wanted to follow Dolby’s rules (or pay its fees), and many began to work on their own alternatives.

The two main HDR formats use metadata that travels alongside the video signal over an HDMI cable. This metadata allows the source video to “tell” an LED display how to render colors. HDR10 uses a fairly simple approach: it sends its metadata once, at the beginning of a video, saying something like, “This video is encoded using HDR, and you should treat it this way.”
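The "send it once" approach can be sketched in a few lines of Python. This is a toy model, not how a real display processes HDR10: the class `StaticHdrMetadata` and the functions `choose_scale` and `play` are hypothetical, the two fields are loosely modeled on the MaxCLL and MaxFALL values that HDR10 content carries, and the simple linear scaling stands in for the far more sophisticated tone mapping real screens perform.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StaticHdrMetadata:
    """Hypothetical container for values that describe the whole video."""
    max_cll_nits: float   # brightest single pixel anywhere in the video
    max_fall_nits: float  # highest frame-average brightness in the video


def choose_scale(meta: StaticHdrMetadata, display_peak_nits: float) -> float:
    """Pick one brightness scale factor for the entire video (toy model)."""
    if meta.max_cll_nits <= 0 or display_peak_nits <= 0:
        raise ValueError("brightness values must be greater than zero nits")
    # Never brighten the content; only dim it if it exceeds the display's peak.
    return min(1.0, display_peak_nits / meta.max_cll_nits)


def play(frame_peaks_nits: List[float], meta: StaticHdrMetadata,
         display_peak_nits: float) -> List[float]:
    """Apply the same scale to every frame, since the metadata arrives only once."""
    scale = choose_scale(meta, display_peak_nits)  # decided once, up front
    return [peak * scale for peak in frame_peaks_nits]


# Hypothetical video mastered up to 4,000 nits, shown on a 1,000-nit screen.
meta = StaticHdrMetadata(max_cll_nits=4000.0, max_fall_nits=1000.0)
print(play([4000.0, 400.0], meta, display_peak_nits=1000.0))
```

Because the setting is chosen once, a dim scene and a bright scene are treated the same way throughout the video, which is the trade-off of this simple approach.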

HDR10 has become the more popular of the two formats. Above all, it is an open standard: manufacturers of LED screens can implement it for free. It is also recommended by the UHD Alliance, which generally prefers open standards to proprietary formats such as Dolby Vision.

Post time: Aug-26-2021